Motion Capture

Work responsibilities

As a Motion Capture Studio Assistant at NYU, working under Professor Todd J. Bryant, Head of Production and Technology, I contributed in the following technical areas:

1) Studio Calibration:

I calibrated the studio using the OptiTrack motion capture system, ensuring accurate tracking and data acquisition.

2) Motion Capture Setup:

I assisted in setting up and calibrating the motion capture systems, including cameras, markers, and other necessary equipment for capturing and recording motion data.

3) TouchOSC Integration:

I used TouchOSC on specific client projects, integrating it into the motion capture workflow.

4) Performance Capture:

I collaborated with actors and performers, capturing their movements and performances using motion capture technology. I ensured precise data acquisition and maintained quality control throughout the capture process.

5) Data Processing:

I cleaned and processed motion capture data by removing noise, calibrating the data, and preparing it for use in animation or other applications.

6) Asset Integration:

I integrated motion capture data into the studio’s animation pipeline or game engine, ensuring proper alignment with character rigs or virtual environments.

7) Technical Troubleshooting:

I identified and resolved technical issues related to motion capture equipment, software, or data. This included addressing marker occlusion, camera calibration, and data synchronization problems. I also fixed motion capture glitches in Maya.

8) Documentation and Reporting:

I maintained accurate records of motion capture sessions, documented procedures, and reported any issues or improvements to the motion capture workflow or equipment.

9) Collaboration and Communication:

I worked closely with other members of the motion capture team, such as supervisors, animators, and technical directors, to ensure smooth integration of motion capture data into the production pipeline.

10) Research and Development:

I stayed updated with the latest advancements in motion capture technology and techniques. I explored new tools and methodologies to enhance the quality and efficiency of motion capture processes.

11) Quality Control:

I conducted thorough reviews and evaluations of motion capture data to ensure accuracy, consistency, and adherence to project requirements and artistic vision.

12) Workflow Optimization:

I identified opportunities for workflow improvements and implemented best practices to streamline the motion capture pipeline, increasing efficiency and productivity.

13) Training and Support:

I assisted in training sessions for actors and team members involved in motion capture processes. I also provided technical support and troubleshooting assistance as needed.

14) Adherence to Production Deadlines:

I worked within project schedules and met assigned deadlines for motion capture deliverables, ensuring the production pipeline remained on track.

15) Laundering Mocap Suits:

I took responsibility for laundering motion capture suits to maintain cleanliness and hygiene.
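The data-processing and troubleshooting items above mention removing noise and dealing with marker occlusion. As an illustration only, here is a minimal Python sketch of two common cleanup steps on a single marker coordinate: linear gap filling for short runs of occluded frames, followed by a centered moving average to reduce jitter. This is not how OptiTrack's software or Maya implement cleanup; the track format (a list of floats with `None` for missing frames) and the `max_gap` and `window` defaults are assumptions chosen for the example.

```python
from typing import List, Optional

# Assumed format: one coordinate of one marker per frame; None = occluded frame.
Track = List[Optional[float]]


def fill_gaps(track: Track, max_gap: int = 10) -> Track:
    """Linearly interpolate runs of missing frames no longer than max_gap.

    Gaps touching the start or end of the take, or longer than max_gap,
    are left as None because there is no reliable value to interpolate from.
    """
    out = list(track)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i  # first missing frame of this gap
        while i < n and out[i] is None:
            i += 1
        end = i  # first valid frame after the gap (or n)
        gap = end - start
        if start > 0 and end < n and gap <= max_gap:
            a, b = out[start - 1], out[end]
            for k in range(gap):
                t = (k + 1) / (gap + 1)  # fraction of the way from a to b
                out[start + k] = a + (b - a) * t
    return out


def moving_average(track: Track, window: int = 5) -> Track:
    """Centered moving average that ignores missing frames in each window."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    out: Track = []
    for i, value in enumerate(track):
        if value is None:
            out.append(None)  # keep unfilled gaps visible to the artist
            continue
        neighbours = [v for v in track[max(0, i - half): i + half + 1] if v is not None]
        out.append(sum(neighbours) / len(neighbours))
    return out
```

Filling gaps before smoothing keeps the average from being skewed by missing frames, and long gaps are deliberately left empty, since a straight line across many frames rarely matches real motion and is better fixed by hand.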

Additionally, I supported Professor Todd J. Bryant’s students in the Amusement Park and Virtual Production classes by collecting, tracking, and analyzing motion capture data while maintaining consistency and quality. I also participated in the construction of two new motion capture studios at NYU. For one of them, built from scratch, I set up the server, managed the cabling, and installed the pole mounts and infrared cameras.

Building a mocap studio from the ground up

PROJECT – SUNJAMMER 6: A TALE BLOWN BY A SOLAR BREEZE BY TONI DOVE

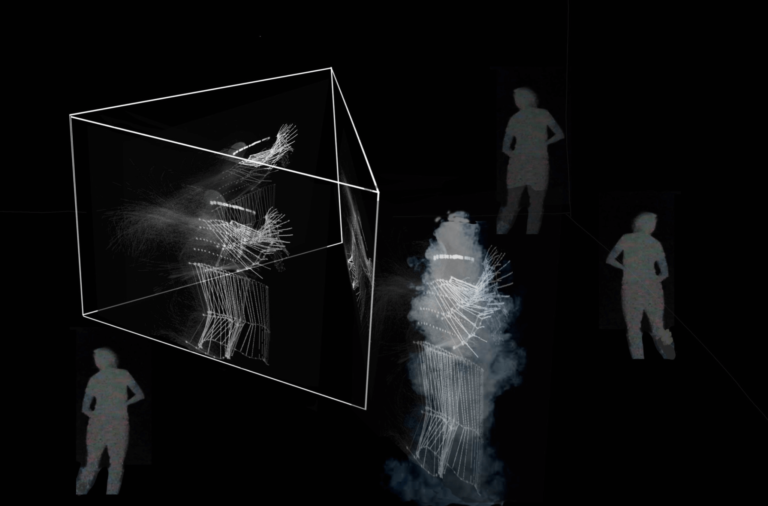

I had the privilege of collaborating with Toni Dove, assisting her in training her AI model on motion capture data. Toni’s project is a unique fusion of a visual novel, a movie, and an engine that weaves compelling narratives. As an instrument builder, Toni creates human-operated machines comprising hardware, software, and media, which serve as tools for storytelling. These machines disrupt conventional notions of time, space, and narrative pattern, exploring new immersive rhythms and dissolving the boundaries between audience and performer, and between the analog and digital realms.

In this project, the focal point is Hypatia, an AI with a narrative agenda. Hypatia’s aim is to establish a connection with viewers, enticing them to listen to her story and delve into a world inhabited by Kepler Redux and a chorus of characters. At the core of Hypatia is her ability to respond to the body language, movements, and specific gestures of multiple viewers. She can detect how many people are in the room, analyze different types of movement and motivation, and respond accordingly with what appears to be a complex personality.

Sketch of the installation layout: a triangular translucent scrim with projections on three 8’ x 10’ sides. Four voice actors: two main characters and a chorus. Voices are spatialized to the projected characters within an immersive sound environment. Hypatia leaves the scrim and inhabits a steam cloud at the tip of the triangle to converse with Kepler Redux. Viewers move around the triangle, engaging with the characters as their story unfolds. The steam screen is optional, depending on the venue’s requirements.

This format combines elements of installation, cinema, and performance for viewers who move freely through the space. Versions of the project exist for both linear performances and infinite-loop installations. Working alongside Toni Dove, I contributed to training the AI model and helped shape this immersive experience for the audience.

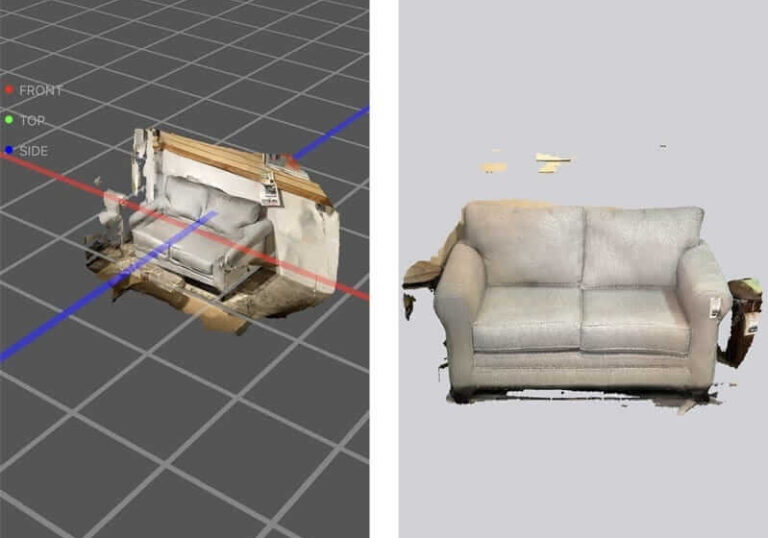

PROJECT – LIVE MOTION CAPTURE THEATRICAL AT THE WHITNEY MUSEUM OF AMERICAN ART BY TERENCE NANCE

As a Motion Capture Associate on Terence Nance’s “Live Motion Capture Theatrical” at the Whitney Museum of American Art, my responsibilities extended beyond recording, processing, and maintaining motion capture data. I incorporated lidar scanning with the Polycam app to scan objects in real time and import them into the Unreal Engine scene using Blueprints. I also used TouchOSC to introduce live objects and switch scenes in real time, making the performance more interactive. Together, these integrations merged the physical and virtual worlds into a dynamic, immersive live motion capture theatrical experience.
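TouchOSC talks to other software by sending Open Sound Control (OSC) messages, typically over UDP. In the show these controls drove Unreal Engine; purely as a standalone illustration, here is a minimal Python sketch of how an OSC message from a control surface can be decoded and mapped to a scene switch. The addresses `/scene/1` and `/scene/2`, the scene names, the `SCENE_BUTTONS` table, and port 8000 are hypothetical, not the project’s actual layout, and only int32 and float32 arguments are handled (no bundles).

```python
import socket
import struct
from typing import List, Optional, Tuple, Union

Arg = Union[int, float]


def _pad(data: bytes) -> bytes:
    """Pad with NUL bytes to a multiple of 4 bytes (OSC alignment rule)."""
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, *args: Arg) -> bytes:
    """Build an OSC message with int32 ('i') and float32 ('f') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool) or not isinstance(arg, (int, float)):
            raise TypeError(f"unsupported argument: {arg!r}")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        else:
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
    # OSC-strings are NUL-terminated, then padded to a 4-byte boundary.
    return _pad(address.encode("ascii") + b"\x00") + _pad(tags.encode("ascii") + b"\x00") + payload


def _read_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read one OSC-string at offset; return it and the next aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    size = end - offset + 1  # characters plus the terminating NUL
    return text, offset + size + (-size % 4)


def decode_message(data: bytes) -> Tuple[str, List[Arg]]:
    """Parse a single (non-bundle) OSC message into (address, arguments)."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing type tag string")
    args: List[Arg] = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
        else:
            raise ValueError(f"unsupported type tag: {tag!r}")
        offset += 4
    return address, args


# Hypothetical mapping from TouchOSC button addresses to scene names.
SCENE_BUTTONS = {"/scene/1": "Intro", "/scene/2": "ScannedObjects"}


def handle(packet: bytes) -> Optional[str]:
    """Return the scene to switch to, or None if this is not a mapped button press."""
    if packet.startswith(b"#bundle"):
        return None  # bundles are not handled in this sketch
    address, args = decode_message(packet)
    # Assumption: a pressed button sends a nonzero first argument.
    if address in SCENE_BUTTONS and args and args[0] != 0:
        return SCENE_BUTTONS[address]
    return None


def listen(port: int = 8000) -> None:
    """Blocking loop: receive OSC over UDP and print scene changes (port is assumed)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            packet, _sender = sock.recvfrom(4096)
            scene = handle(packet)
            if scene is not None:
                print(f"switch to scene: {scene}")
```

Calling `listen()` blocks and prints a scene name whenever a mapped button is pressed. A production setup would normally handle these messages inside the engine rather than in a separate Python process; the sketch only shows the message format and the address-to-action mapping.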