Volumetric Capture

EXPERIENCE:

I had the opportunity to set up a 12′ x 12′ volumetric studio at NYU’s Rlab, thanks to Todd J. Bryant. The Evercoast Maverick team gave us significant support, but I also did my own research and development to fill the remaining knowledge gaps. That meant working out lighting techniques suited to the capture space, determining which settings to adjust in the Evercoast Maverick software, and learning Arcturus HoloEdit well enough to edit the processed data. Closing these gaps through independent exploration and problem-solving let us get the best results we could from the project.

PROCESS:

The setup involved the following technical steps:

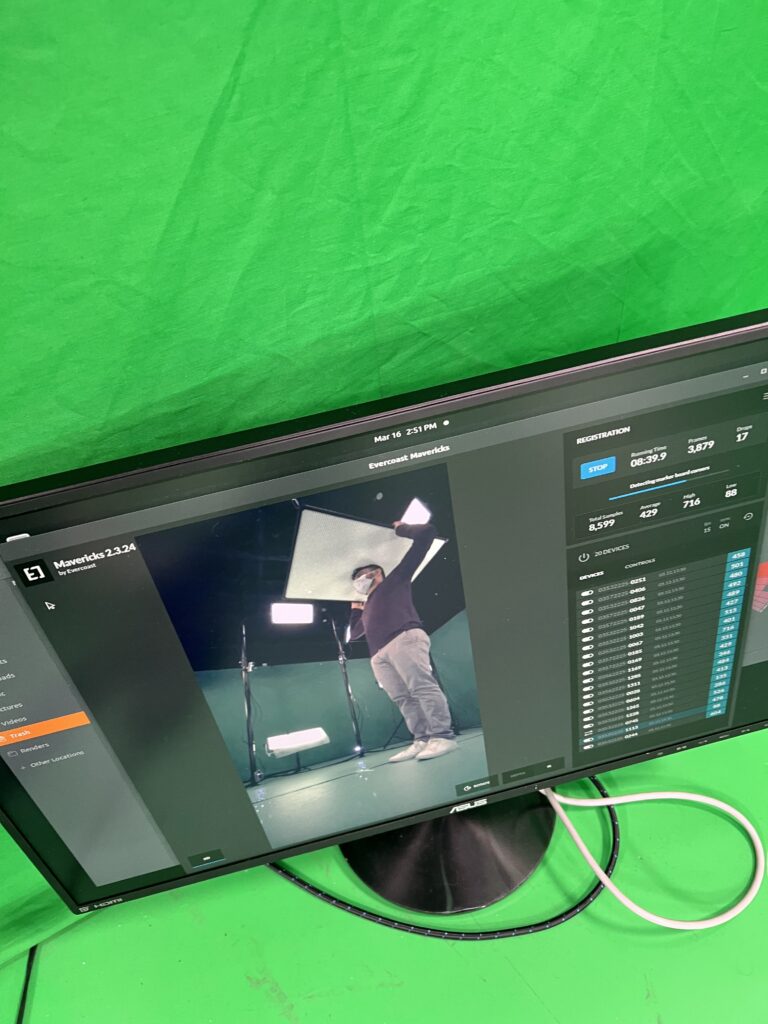

Depth Sensor Cameras: I installed depth sensor cameras in the studio at three different heights, each with a defined tilt. The topmost camera was angled 22.5 degrees downwards, the middle cameras were angled 9.5 degrees, and the bottommost cameras were angled 22.5 degrees upwards.
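As a rough illustration of what these tilt angles mean geometrically, the sketch below computes how far above or below its own mounting height a tilted camera's optical axis lands at a given horizontal distance. The 6 ft distance is an assumption made only for this example (a camera on the edge of the 12′ x 12′ space aimed at its center); the function name `aim_offset` is hypothetical, and the actual mounting heights and distances in the studio are not recorded here.

```python
import math


def aim_offset(tilt_deg: float, horizontal_distance: float) -> float:
    """Vertical distance between a camera's mounting height and the point
    where its optical axis crosses a vertical line `horizontal_distance`
    away. The result is in the same units as `horizontal_distance`.
    `tilt_deg` is the magnitude of the tilt from horizontal."""
    return horizontal_distance * math.tan(math.radians(tilt_deg))


# Assumed for illustration only: camera 6 ft from the center of the space.
ASSUMED_DISTANCE_FT = 6.0

# Tilt magnitudes taken from the setup description above.
for label, tilt in [("top/bottom rows", 22.5), ("middle row", 9.5)]:
    offset = aim_offset(tilt, ASSUMED_DISTANCE_FT)
    print(f"{label}: axis shifts {offset:.2f} ft over {ASSUMED_DISTANCE_FT} ft")
```

Under that assumed distance, the steeper 22.5 degree tilt moves the aim point roughly two and a half times as far vertically as the 9.5 degree tilt, which is consistent with the top and bottom rows being angled to look across the subject from further above and below.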

Lighting Setup: I mounted lights on poles placed around the studio, positioning them for optimal illumination of the subject during volumetric capture.

Building Lighting Calibration: I calibrated the lighting setup within the studio to ensure accurate color representation and consistency across the capture environment. This step involved adjusting light intensity and color temperature.

Camera Calibration: I calibrated the depth sensor cameras to accurately capture the volumetric data. This step involved aligning the cameras and fine-tuning their settings to capture precise depth information.

Evercoast Maverick Software: I used the Evercoast Maverick software to record a short, 6-second clip of the subject within the volumetric studio. This software allowed for real-time capture and processing of volumetric data.

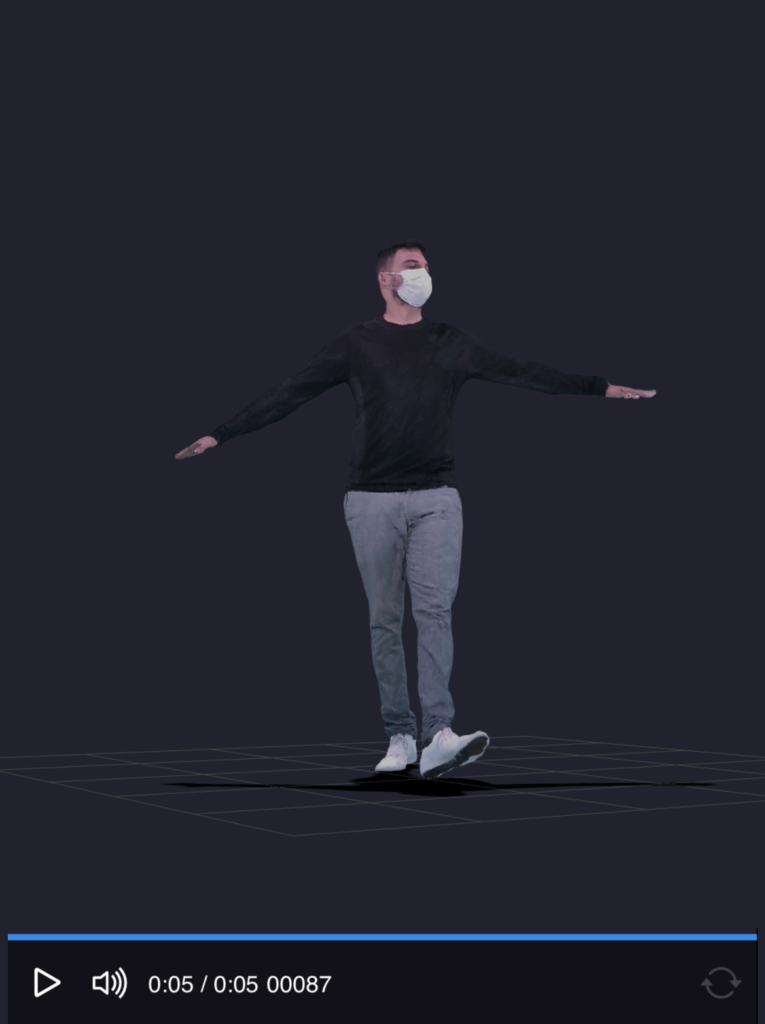

AWS Server Processing: After capturing the footage, I processed the volumetric data on an AWS server. This involved leveraging the computational power of the cloud to handle the large amount of data generated by the volumetric capture.

Footage Editing: Once the processing was complete, I imported the footage into Arcturus Studio’s HoloEdit software. In HoloEdit, I edited the volumetric footage, making adjustments, applying effects, and refining the overall visual quality.

In my role as a studio graduate assistant, I assisted several students whose thesis projects used volumetric capture for performance pieces. I offered guidance, technical expertise, and hands-on help throughout the process: setting up the capture equipment, calibrating the cameras, ensuring proper lighting conditions, and overseeing the capture sessions. I also supported post-production, including data processing, editing, and refining the captured volumetric footage. My aim was to help the students realize their thesis projects and to deepen their understanding and use of volumetric capture for immersive performance experiences.